Tutorials

Tutorial Schedule Updated June 18, 2009

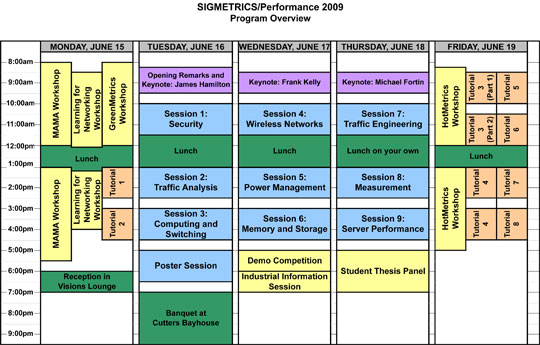

Program Overview [pdf]

Detailed Schedule

| Monday, June 15 | ||

1:00 - 2:30pm |

Tutorial 1: Online Social Networks | |

3:00 - 4:30pm |

Tutorial 2: Internet Measurements: Fault Detection, Identification, and Topology Discovery | |

6:00 - 7:00pm |

Informal Reception in Visions Lounge (top floor of Renaissance Seattle Hotel) |

|

Friday, June 19 - Track 1 |

||

8:30 - 10:00am |

Tutorial 3, Part I: OpenFlow Introduction: What It Is, What You Can Do With It, and How to Get It | |

10:30am-12:00pm |

Tutorial 3, Part II: OpenFlow Interactive Tutorial | |

1:00 - 2:30pm and 3:00 - 4:30pm |

Tutorial 4: What Goes Into a Data Center? | |

Friday, June 19 - Track 2 |

||

8:30 - 10:00am |

Tutorial 5: PNUTS: Yahoo's Cloud Platform for Structured Data | |

10:30am - 12:00pm |

Tutorial 6: Map Reduce Programming Model | |

1:00 - 2:30pm |

Tutorial 7: Amazon's Cloud Computing Efforts | |

3:00 - 4:30pm |

Tutorial 8: Developing and Scaling Web Applications on Google App Engine | |

Tutorial Details

Tutorial 1: Online Social Networks

Krishna Gummadi, Max Planck Institute for Software Systems

Tutorial 2: Internet Measurements: Fault Detection, Identification, and Topology Discovery

Renata Cruz Teixeira, CNRS and UPMC Paris Universitas

Network management tasks such as troubleshooting failures, diagnosing anomalous behavior or monitoring service-level agreements are essential for the performance and availability of IP networks. These tasks are challenging because no single entity (e.g., Internet providers, application service provider, or end-users) controls all elements in an end-to-end path. In addition, the measurement data available to a given entity may not directly report the properties that it needs to know. Hence, network management needs network tomography techniques---the practice of inferring unknown network properties from measurable ones. Network tomography algorithms have been proposed for numerous applications. For example, a network operator can issue end-to-end probes to infer link delays or loss rates, or use reachability measurements to determine the location of faulty equipment; similarly, end-users can combine end-to-end measurements to infer the performance of their Internet provider. However, despite more than ten years of research in network tomography and the clear operational need for these techniques, their deployment so far is limited; mainly because the reality of network monitoring makes it hard to obtain the inputs required by tomography algorithms. For instance, the accurate network topology is not available for end-hosts and continuous monitoring of the status of end-to-end paths is often not feasible because of the high probing overhead. Fortunately, recent advances in network monitoring are bridging the gap to make network tomography practical.

This tutorial will give an overview of the monitoring challenges of network tomography and the techniques that address them. After a brief survey of the different applications of network tomography, we will use fault diagnosis to deepen the discussion. First, we will introduce the binary tomography algorithm, which assumes that links are either good or bad and infer the most likely set of bad links. Then, we will describe recent developments in monitoring techniques and systems that allow measuring the inputs for this algorithm.

Tutorial 3: Part 1: OpenFlow Introduction: What It Is, What You Can Do With It, and How To Get It

Guido Appenzeller and Brandon Heller, Stanford University

The wide proliferation of networks as critical infrastructure has come

as both a blessing and a curse; while networking research today is

more relevant than ever, making an impact is hard. Specifically, there

is almost no practical way to experiment with new network protocols or

innovative packet forwarding hardware in sufficiently realistic

settings (e.g., at scale, carrying real traffic) to gain the

confidence needed for their widespread deployment.

The OpenFlow protocol overcomes these barriers to innovation. The

basic idea is simple: we separate the packet forwarding functions of a

switch from the software that makes the high level routing

decisions. Today OpenFlow has been implemented by Cisco, Juniper, HP,

and NEC, and other vendor implementations are in progress.

In this tutorial, we show how OpenFlow is currently being used for

networking research as well as deployments of innovative protocols and

hardware - in enterprise networks, national backbones, and data

centers. We highlight ongoing research in the areas of security,

mobility, visualization, virtualization, and energy management, with

demos. One demo shows virtual machines migrating across buildings,

states, and continents. Another demo shows a data center network

switching over half a terabit/s of traffic that dynamically adapts to

changing traffic conditions, to minimize its energy consumption. We

also present results from the Stanford pilot deployment.

By the end of the tutorial, each attendee will know what OpenFlow is,

how they can get it, and have ideas for research enabled by it.

Tutorial 3: Part 2: OpenFlow Interactive Tutorial

Guido Appenzeller and Brandon Heller, Stanford University

In this interactive tutorial, each participant will have the

opportunity to complete a hands-on exercise. Everyone will receive a

VM-based computing environment with pre-built OpenFlow reference

switch, controller, and network tools. First, we show how to start up

the reference implementations and tools. Then each participant will

create a simple OpenFlow controller, that when combined with an

OpenFlow switch, acts as a learning Ethernet switch. The presenters

will be on hand to make to answer any questions, and the only required

equipment is a laptop (running any OS).

By the end of this tutorial, each attendee will feel comfortable with

the OpenFlow reference implementations and debugging tools.

Tutorial 4: What Goes Into a Data Center?

Dave Maltz and Albert Greenberg, Microsoft Research

The data centers that implement the web and cloud computing services

on which society increasingly relies are data factories on a scale

rarely seen before. Each of them consumes upwards of 15 megawatts of

power, hosts 50 to 200 thousand servers, and handles trillions of

requests a day from customers around the globe.

This tutorial will provide an overview of the components and systems

that go into a modern mega-data center like those used to host web

search, massive email systems, and dynamically scalable cloud

applications. We'll pay special attention to interplay between these

systems and the costs and requirements that motivate the solutions

used in data centers. Attendees will learn about how data center

applications are structured, the workloads and network traffic demands

they generate, and how this affects the data center's internal and

external network. We will look at the data center network, both

current and newly emerging designs, and identify opportunities for

innovation. Closing the loop, we will examine the techniques used to

manage and provision data center applications, and explore how

measurement, modeling, and incentives could be used to drive data

centers towards their most economically efficient operating points.

Attendees will leave with an understanding of:

- the state of the art in data center components, costs, and systems

- the requirements and economics that drive data center structure

- the types of workloads seen in data centers, and why they arise

- some key challenges to realizing the potential of cloud computing

Tutorial 5: PNUTS: Yahoo's Cloud Platform for Structured Data

Brian Cooper, Yahoo

Tutorial 6: Map Reduce Programming Model

Jelena Pjesivac-Grbovic and Jerry Zhao, Google

Inspired by similar concepts in functional languages dated as early as

60's, Google first introduced MapReduce in 2004. Now, MapReduce has

become the most popular framework for large-scale data processing at

Google and it is becoming the framework of choice on many

off-the-shelf clusters.

In this tutorial, we first introduce the MapReduce programming model,

illustrating its power by a few examples. We discuss the MapReduce

model and its relationship to MPI and DBMS. Performance and

scalability are key features of the Google MapReduce implementation

and we will discuss a few techniques used to achieve this goal.

Google MapReduce exploits data locality to reduce network overhead.

We utilize different scheduling techniques to ensure a job is

progressing in the presence of variable system load. Finally, since

failures are common in our computing environment, we provide a number

of failure avoidance and recovery features to ensure the job

completion in such environment.

Tutorial 7: Amazon's Cloud Computing Efforts

Jeffrey Barr, Amazon

This session will start with a review of Amazon's line of scalable infrastructure web services including the Elastic Compute Cloud (EC2), the Simple Storage Service (S3), and the Simple Database (SDB). After the review we'll log in to an Amazon EC2 instance and dive deep into

some genuine PHP code. We'll see how to access S3 and SimpleDB, and how to start up and manage EC2 instances programatically. At the end of this tutorial you will be ready to build your own AWS-powered applications.

Tutorial 8: Developing and Scaling Web Applications on Google App Engine

Chris Beckmann, Google

Learn how to create great web applications quickly on Google App

Engine using the Django web framework and the Python language. Google

App Engine lets you host complete, scalable web applications written

in Python with minimal fuss. This tutorial assumes basic familiarity

with Python but definitely no advanced Python knowlege; Django

experience is optional. You will learn how to use the Django web

framework with the datastore API provided by Google App Engine, and

how to get the most mileage out of the combination. In this session

we'll also cover techniques you can use to improve your application's

performance when you surpass a simple application size. We'll discuss

Python runtime tricks, various types of caching, dynamic module

loading, and App Engine Python idioms. We will also cover common

strategies for scaling web applications to millions of users.

Reception Information

On Monday evening from 6:00 PM – 7:00 PM, there will be an informal reception in the Visions Lounge on the top floor of the Renaissance Seattle Hotel. The room has a wonderful view, and we will have a cash bar with a bartender on hand.